How Websites Happen, Part Two: Optimizing Performance

February 27, 2019

In the previous post, I walked through the details of the critical rendering path. That post explained how an HTML file goes from arriving at the browser to being rendered on the page.

For the second half of the topic, I’ll focus on a few ways developers can reduce the time and cost associated with these steps, making for a more performant, enjoyable user experience as a result.

To better illustrate the ideas, I’ve prepared a simple app in (mostly) vanilla JavaScript.

You can see the demo app here, or view/clone the source on GitHub and skip the next section if you don’t care to read details about the example site.

Exploring the Site

The repository for this project provides two examples: one basic site and one optimized. Because the project is relatively straightforward (mostly Web Component declarations), I’ll walk through only the core modules before explaining the optimization steps.

The first module of focus is the base class from which all components, pages, and core app logic are derived.

./basic/src/base/index.js:

import { render } from '/node_modules/lit-html/lit-html.js'

class Base extends HTMLElement {

constructor() {

super()

this.attachShadow({ mode: 'open' })

}

connectedCallback() {

this._render()

this.onMount()

}

updateTpl() {

this._render()

}

disconnectedCallback() {

this.onUnmount()

}

dispatch(event, detail) {

this.dispatchEvent(

new CustomEvent(event, { detail, bubbles: true, composed: true })

)

}

getChild(qry) {

return this.shadowRoot.querySelector(qry)

}

getChildren(qry) {

return this.shadowRoot.querySelectorAll(qry)

}

_render() {

render(this.tpl(), this.shadowRoot)

}

/*abstract*/ onMount() {}

/*abstract*/ onUnmount() {}

/*abstract*/ tpl() {}

}

export default Base

If you’re familiar with Web Components, much of the above should look familiar. If not, I’m writing a few semantic wrapper functions around the lifecycle callbacks inherited from HTMLElement.
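To make the lifecycle flow easier to see in isolation, here is a minimal, DOM-free sketch of the same pattern. It deliberately drops HTMLElement and lit-html so it can run anywhere; the `SketchBase` and `Greeting` names and the `log` and `rendered` fields are hypothetical and exist only to show the order of calls.

```javascript
// DOM-free stand-in for the Base class: no HTMLElement, no lit-html.
// `rendered` and `log` are hypothetical fields used only to observe call order.
class SketchBase {
  constructor() {
    this.rendered = null
    this.log = []
  }

  // In a real component, the browser calls this when the element is attached.
  connectedCallback() {
    this._render()
    this.onMount()
  }

  // In a real component, the browser calls this when the element is removed.
  disconnectedCallback() {
    this.onUnmount()
  }

  _render() {
    this.rendered = this.tpl()
    this.log.push('render')
  }

  // Hooks that subclasses override.
  onMount() {}
  onUnmount() {}
  tpl() {}
}

class Greeting extends SketchBase {
  onMount() {
    this.log.push('mount')
  }
  onUnmount() {
    this.log.push('unmount')
  }
  tpl() {
    return '<p>Hello</p>'
  }
}

const el = new Greeting()
el.connectedCallback()
el.disconnectedCallback()
console.log(el.log.join(' > ')) // render > mount > unmount
```

The point is that subclasses only fill in `onMount`, `onUnmount`, and `tpl`; the base class decides when each one runs.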

If you haven’t checked it out yet, lit-html is a lightweight, intuitive library from the Polymer team that makes templating a breeze. It also just hit its first stable release, so it’s worth taking a look.

Now that we’ve seen the base class used across the project, let’s take a look at the main app module:

./basic/src/app.js:

import { html } from '/node_modules/lit-html/lit-html.js'

import { unsafeHTML } from '/node_modules/lit-html/directives/unsafe-html.js'

import Base from './base/index.js'

import registerComponent from './common/register-component/index.js'

import routes from './common/routes/index.js'

import './components/v-router/index.js'

import './pages/page-one/index.js'

import './pages/page-two/index.js'

class VApp extends Base {

constructor() {

super()

this.navigate = this.navigate.bind(this)

}

onMount() {

let page = location.pathname.substr(1)

this.setActivePage((page && this.isRegistered(page)) || 'v-page-one')

this.shadowRoot

.querySelector('#root')

.addEventListener('nav-changed', ({ detail: { route } }) =>

this.navigate(route)

)

}

navigate(route) {

this.setActivePage(route)

}

setActivePage(page) {

if (!page) return

const pageTag = `<${page}></${page}>`

this.htmlToRender = html`

${unsafeHTML(pageTag)}

`

this.updateTpl()

}

isRegistered(page) {

return routes.indexOf(`v-${page}`) > -1 ? `v-${page}` : false

}

tpl() {

return this.htmlToRender

? html`

<style>

#root {

max-width: 1200px;

margin: 0 auto;

}

</style>

<div id="root">

<v-router></v-router>

${this.htmlToRender}

</div>

`

: ``

}

}

registerComponent('v-app', VApp)

The app module’s primary concern is maintaining control of the application’s viewport, namely which page is currently being displayed. Whenever a new page is requested, the module checks to see if it has a corresponding component to render for that route. If not, it defaults to displaying the homepage.
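The route check described above can be sketched as a single pure function. The contents of `knownRoutes` are an assumption (the routes module isn’t shown here), and `resolvePage` is a hypothetical helper that combines what `onMount()` and `isRegistered()` do together.

```javascript
// Assumed route list; the app imports `routes` from ./common/routes/index.js,
// but its contents are not shown in the article.
const knownRoutes = ['v-page-one', 'v-page-two']

// Map a URL path such as "/page-two" to a registered tag name,
// falling back to the homepage component for anything unknown.
function resolvePage(pathname, routes = knownRoutes, fallback = 'v-page-one') {
  const page = pathname.replace(/^\//, '')
  const tag = `v-${page}`
  return page && routes.includes(tag) ? tag : fallback
}

console.log(resolvePage('/page-two')) // v-page-two
console.log(resolvePage('/')) // v-page-one
console.log(resolvePage('/missing')) // v-page-one
```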

The final line of the file (and every file with a Web Component declaration in the project) takes care of registration:

./basic/src/common/register-component/index.js:

export default (txt, className) => {

if (customElements.get(txt)) return

const register = () => customElements.define(txt, className)

window.WebComponents ? window.WebComponents.waitFor(register) : register()

}

After fleshing out a few more components and examining the project in the browser, it’s noticeable that this small site with a few pages comes with some performance costs.

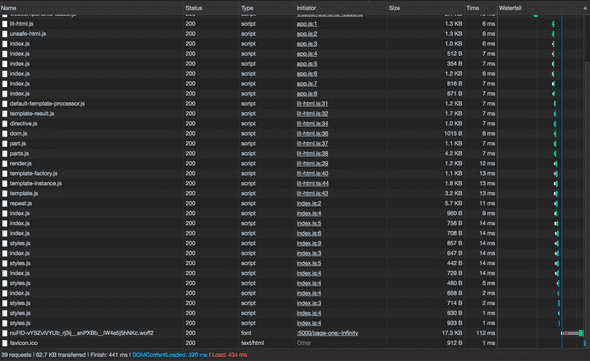

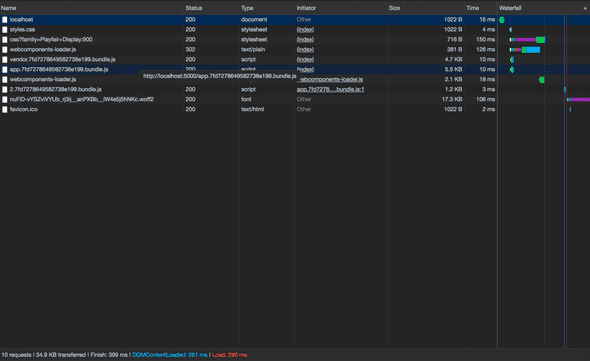

A closer look at the “network” tab in Chrome’s DevTools offers insight into this idea:

As you can see, simply loading the page in the browser resulted in almost 40 resource requests and about 63 KB sent from the server.

While the size isn’t massive, it’s also just a simple app with hardly any content. Adding more images, API calls, and boilerplate pages would easily double or triple this number.

Upon closer inspection, we can see that many of the file references are my Web Component declarations—many of which aren’t even used on the first page. No good!

In order to get this app in better shape, let’s take a look at a few of the optimization techniques available.

First Things First: Check Your Images

Before delving deep into config options and code tweaks, it’s worth mentioning that unchecked images are often the cause of bulky page sizes.

The easiest way to reduce image size is to compress them. There are a few different options online, but I enjoy Compressor IO for my optimization needs.

It’s also beneficial to prefer JPEG over PNG assets for photos and other complex images. JPEG uses lossy compression, which discards image detail that’s hard to perceive in order to shrink the file (unlike PNG, which is lossless and preserves all pixel data).

Once you’ve made a pass through the assets referenced on your page, it’s time to look at code-side optimizations worth making.

Module Bundler

If you’ve made it this far, you’ve either seen the skeleton of the example application or have a general idea of the issue at hand: building a site using mostly JavaScript while keeping the browser’s requests light and as few as possible.

One of the most powerful tools available to help us accomplish this task is a module bundler. In short, it enables us to package all our app logic together in one file to minimize the number of requests needed to render our app.

Although the race has tightened recently with offerings like Rollup and Parcel, the leading solution for module bundling over the last few years has been Webpack.

In its simplest usage, it’ll parse our JavaScript files from before—marking all dependencies along the way—and combine them into a single file that gets injected into index.html after the build process.
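As a rough mental model of that dependency walk, here is a toy sketch. It is not how Webpack is implemented; the `graph` object is a hypothetical, hand-written stand-in for the import statements a bundler would discover by parsing each file.

```javascript
// Hypothetical dependency graph: each key is a module, each value lists its imports.
const graph = {
  './app.js': [
    './base/index.js',
    './pages/page-one/index.js',
    './pages/page-two/index.js',
  ],
  './pages/page-one/index.js': ['./base/index.js'],
  './pages/page-two/index.js': ['./base/index.js'],
  './base/index.js': [],
}

// Depth-first walk from the entry point. Each module is emitted exactly once,
// after its own dependencies, which is the order a single bundle needs.
function collectModules(entry, deps, seen = new Set()) {
  if (seen.has(entry)) return []
  seen.add(entry)
  const ordered = []
  for (const dep of deps[entry] || []) {
    ordered.push(...collectModules(dep, deps, seen))
  }
  ordered.push(entry)
  return ordered
}

const bundleOrder = collectModules('./app.js', graph)
console.log(bundleOrder)
```

Even though three modules import the base class, it shows up only once in the result, which is part of why one bundle can replace dozens of requests.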

In order to use Webpack in our project, we’ll need to install a few dependencies:

yarn add webpack webpack-dev-server html-webpack-plugin

To be clear, webpack-dev-server is what we’ll use to help preview our app during development, and html-webpack-plugin enables the script injection described earlier.

Now that we have the dependencies installed, let’s create a simple Webpack config in the project’s root.

./optimized/webpack.config.js:

const webpack = require('webpack')

const { resolve } = require('path')

const HtmlWebpackPlugin = require('html-webpack-plugin')

module.exports = {

context: resolve(__dirname, 'src'),

entry: {

app: './app.js',

},

output: {

filename: '[name].bundle.js',

path: resolve(__dirname, 'dist'),

},

devServer: {

hot: true,

publicPath: '/',

historyApiFallback: true,

},

plugins: [

new webpack.HotModuleReplacementPlugin(),

new HtmlWebpackPlugin({

template: resolve(__dirname, 'index.html'),

}),

],

}

Note that the Webpack file syntax uses CommonJS (all those require calls at the top), which is different from the ES Modules we’ve used previously. For a refresher on the different module systems, this article is a fantastic guide.

Looking deeper at the config above, we identify the entry point of our application, or app.js as we linked in our index.html previously, and define a location and file name for the eventual bundled output (app.bundle.js, in this case).

We also add a few configs for the dev server, specifically where to find our root HTML file, as well as the option to use historyApiFallback for redirecting to our index.html file on page refresh. Otherwise, the browser will request the HTML file from the server at the wrong location, and we’ll get an ugly 404 error 😳.
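The fallback behavior boils down to something like the following. This is a simplified sketch, not the dev server’s actual implementation (the real middleware applies extra checks to each request); `staticFiles` and `resolveRequest` are hypothetical names.

```javascript
// Hypothetical set of files the server can actually serve.
const staticFiles = new Set(['/index.html', '/app.bundle.js'])

// Decide which file answers a request: real assets are served as-is,
// while unknown paths (our client-side routes) fall back to index.html.
function resolveRequest(pathname, files = staticFiles) {
  if (pathname === '/') return '/index.html'
  return files.has(pathname) ? pathname : '/index.html'
}

console.log(resolveRequest('/app.bundle.js')) // /app.bundle.js
console.log(resolveRequest('/page-two')) // /index.html
```

Once index.html is served, the app reads `location.pathname` and renders the matching page itself.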

Finally, the HtmlWebpackPlugin allows us to customize the index.html file created during the build by pointing to a template.

With our Webpack file in place, go ahead and run:

webpack-dev-server --mode development

to make sure your dev server is working. If everything looks good, you’re ready to build!

webpack -p

When the build is finished, navigate to the newly created dist/ directory and launch a static server. I like to use serve:

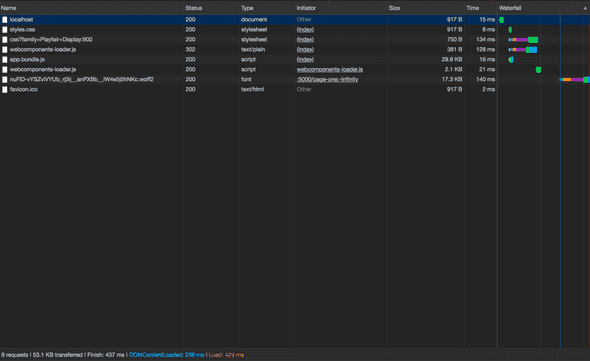

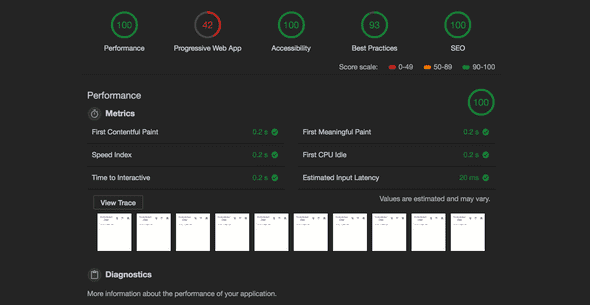

serve --single

If you inspect the resulting page in the network tab again, you should see all those scripts folded into app.bundle.js!

As the image shows, the requests dropped to a measly eight, and the total page size is now 53.1 KB.

This is good, but we could do better. Notice upon inspecting the app.bundle.js file that components are loaded that aren’t used on the page, like v-page-two and v-img-container.

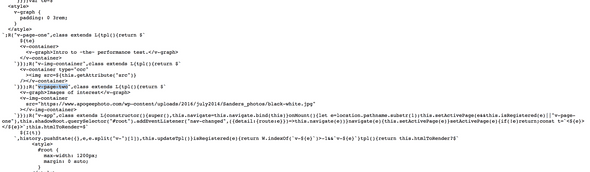

./optimized/dist/app.bundle.js:

To fix this, we’ll use another tool on the modern web’s workbench: code splitting!

Code Splitting

One of the most useful features that Webpack offers is programmatically loading modules inline rather than requiring static imports at the top of the dependent file. This is referred to as code splitting.

Using this feature is simple. Let’s head back to the app.js file and refactor the code to use this feature.

./optimized/src/app.js:

// ... VApp class from before ...

async onMount() {

let page = location.pathname.substr(1)

await this.setActivePage((page && this.isRegistered(page)) || 'v-page-one')

this.shadowRoot

.querySelector('#root')

.addEventListener('nav-changed', ({ detail: { route } }) => this.navigate(route)

)

}

navigate(route) {

this.setActivePage(route)

}

async setActivePage(page) {

if (!page) return

let prettyName = page.split('v-')[1]

const pageTag = `<${page}></${page}>`

this.htmlToRender = html`

${unsafeHTML(pageTag)}

`

history.pushState({}, page, prettyName)

// Here's the interesting part ...

await import(`./pages/${prettyName}`)

this.updateTpl()

}

// ...

First, we remove the two page component references in app.js. Then, we use async/await to

- wait for setActivePage() to finish, where our dynamic import will happen, and

- wait for the module to import before updating the markup.

Now, our page modules will only be requested from the browser when we navigate to their corresponding page.
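To see the load-on-demand idea without a bundler, here is a small sketch that can run in Node. The `loaders` object is a hypothetical stand-in for the dynamic `import()` calls, and `requested` exists only to record when a "request" would have gone out.

```javascript
// Hypothetical loaders standing in for `import('./pages/...')`.
// Each one records that it ran so we can see when a "request" happens.
const requested = []
const loaders = {
  'page-one': () => Promise.resolve(requested.push('page-one')),
  'page-two': () => Promise.resolve(requested.push('page-two')),
}

// Cache the promise per page so a module is fetched at most once,
// similar to how an imported module is only evaluated the first time.
const loaded = new Map()
function loadPage(name) {
  if (!loaded.has(name)) loaded.set(name, loaders[name]())
  return loaded.get(name)
}

// Wait for the page module before producing its markup.
async function navigate(name) {
  await loadPage(name)
  return `<v-${name}></v-${name}>`
}

const demo = navigate('page-one')
  .then(() => navigate('page-one'))
  .then((tag) => {
    console.log(tag, requested)
    return tag
  })
```

Visiting page one twice triggers a single load, and page two is never requested because nothing navigated to it.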

Another useful split we can make is at the bundle level. Rather than having to update our app whenever a dependency reaches a new version, we can separate the packages in our node_modules directory into their own bundle that’s referenced in index.html. To do this, we add the following to our Webpack config:

./optimized/webpack.config.js:

// ... webpack configs ...

optimization: {

splitChunks: {

cacheGroups: {

default: false,

vendors: false,

vendor: {

name: 'vendor',

chunks: 'all',

test: /node_modules/,

priority: 20,

},

},

},

},

// ... webpack configs ...

With a few areas of our code split out, let’s check DevTools again to see our progress:

Cutting the content delivered by roughly 45% (from about 63 KB down to 34.9 KB) is quite an achievement, but we can still do better. To gain a little more insight, let’s head over to another section of Chrome’s DevTools: the Audits tab.

Auditing Performance

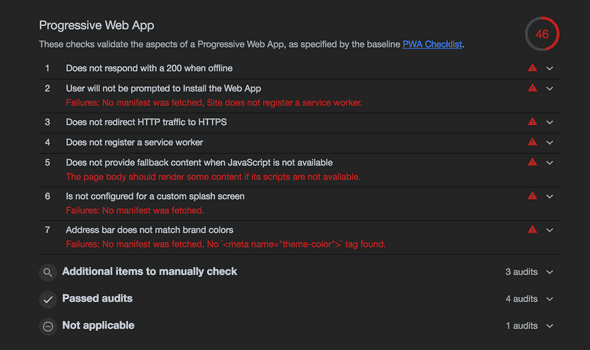

When we run an audit on the site, we see a lot of stellar scores with a glaring outlier:

Yikes, someone bombed a section of the test! Let’s drill in and see what’s causing the poor marks:

The section in question, Progressive Web App, measures how gracefully the site handles network loss and caching through the use of a service worker. Although some of these suggestions might seem like overkill, it’s worth peppering them into an application to adhere to best practices.

Manifest.json

The easiest task I spot on the list is creating a manifest.json file. If you’re not sure why this file is useful, you can read more about it here.

In short, it provides the browser with information about your page to supply to users when saving the site to their devices. Let’s create one now.

./optimized/manifest.json:

{

"short_name": "perf-zone",

"name": "Performance Zone",

"icons": [

{

"src": "/icons/site-icon.png",

"type": "image/png",

"sizes": "192x192"

},

{

"src": "/icons/site-icon-512.png",

"type": "image/png",

"sizes": "512x512"

}

],

"start_url": "/",

"background_color": "#000000",

"display": "standalone",

"theme_color": "#000000"

}

Being JSON, many of the properties are self-documenting. You set a few name variables, identify icons and theming to use for the app, and fill in a few other configs.

One important property to set is “start_url”. It’s the page the browser will direct users to when they launch your app.

The “standalone” setting on the “display” property will launch the site without an address bar/tooling so it looks and feels like a native app.
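If you want a quick sanity check before running the audit, a tiny script like the one below can flag missing properties. The `REQUIRED_FIELDS` list is my own assumption based on the manifest above, not an official checklist, and `missingManifestFields` is a hypothetical helper.

```javascript
// Fields this sketch treats as required. This is an assumption based on the
// manifest shown above, not an official list.
const REQUIRED_FIELDS = ['short_name', 'name', 'icons', 'start_url', 'display']

// Return the names of required fields that are missing or empty.
function missingManifestFields(manifest, required = REQUIRED_FIELDS) {
  return required.filter((field) => {
    const value = manifest[field]
    if (Array.isArray(value)) return value.length === 0
    return value === undefined || value === ''
  })
}

// A manifest that forgot to set start_url.
const incomplete = {
  short_name: 'perf-zone',
  name: 'Performance Zone',
  icons: [{ src: '/icons/site-icon.png', type: 'image/png', sizes: '192x192' }],
  display: 'standalone',
}

console.log(missingManifestFields(incomplete)) // [ 'start_url' ]
```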

In order for the browser to know about this file, I include a reference in my index.html file template:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<meta name="description" content="Sample site" />

<meta name="theme-color" content="#000000" />

<link rel="manifest" href="/manifest.json" />

<meta http-equiv="X-UA-Compatible" content="ie=edge" />

<title>Vanilla Site</title>

<link

href="https://fonts.googleapis.com/css?family=Playfair+Display:900"

rel="stylesheet"

/>

<script src="https://unpkg.com/@webcomponents/webcomponentsjs/webcomponents-loader.js"></script>

</head>

<body>

<v-app></v-app>

<noscript

>If you don't allow JavaScript, you're going to have a bad time

here!</noscript

>

</body>

</html>

I also threw in a noscript tag to satisfy the audit’s request for fallback content when JavaScript is unavailable.

Next, I installed copy-webpack-plugin and created some icons and a simple robots.txt file so my new config files make their way into my build directory when I’m ready to deploy:

const CopyWebpackPlugin = require('copy-webpack-plugin')

// ...webpack config...

plugins: [

new CopyWebpackPlugin([

{

from: './static',

to: './',

},

]),

],

// ...webpack config...

Now with fallback content, manifest.json, and a robots.txt served, it’s time to tackle the real culprit of the poor grade: the service worker.

Service Worker

Service workers are scripts that run in the background of your application, separate from the app logic used for the site itself. This is useful for features such as caching, push notifications, and offline asset delivery. For more information, Google has published a thorough overview.

For our purposes, we’ll implement a basic example that gives us the features Google requires to pass its audit.

./optimized/src/static/sw.js:

self.addEventListener('install', function(e) {

e.waitUntil(

caches.open('perf-zone').then(function(cache) {

return cache.addAll([

'/',

'/index.html',

'/app.bundle.js',

'/vendor.bundle.js',

])

})

)

})

self.addEventListener('fetch', function(e) {

e.respondWith(

caches.match(e.request).then(function(res) {

return res || fetch(e.request)

})

)

})

The service worker created consists of two event listeners. The first creates a new cache and adds our important resources (the index file as well as our app and vendor bundles).

Next, we listen for fetch events and intercept them with the corresponding logic. If the content exists in the cache, we serve it; otherwise, the fetch request continues to the network.

This is how we’re able to still serve the site even if the user is offline; they’re pulling the necessary resources from their device rather than a network request.
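The cache-first decision in the fetch handler can be sketched outside the browser like this. A plain `Map` stands in for the Cache API and `fakeFetch` for the network; both are hypothetical so the logic can run in Node.

```javascript
// A Map stands in for the Cache API and `fakeFetch` for the network.
// Both are hypothetical so the logic can run outside a browser.
const cache = new Map([['/index.html', 'cached index']])
let networkCalls = 0
const fakeFetch = (url) => {
  networkCalls += 1
  return Promise.resolve(`network response for ${url}`)
}

// Cache-first: answer from the cache when possible, otherwise go to the network.
function respond(url, store = cache, fetcher = fakeFetch) {
  const hit = store.get(url)
  return hit !== undefined ? Promise.resolve(hit) : fetcher(url)
}

const results = Promise.all([respond('/index.html'), respond('/api/data')])
results.then(([fromCache, fromNetwork]) => {
  console.log(fromCache) // cached index
  console.log(fromNetwork) // network response for /api/data
})
```

Only the second request touches the network, which is the same reason the cached pages keep working offline.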

After the file is created, I reference it in index.html.

./optimized/index.html:

<script>

if ('serviceWorker' in navigator) {

navigator.serviceWorker.register('/sw.js').then(function() {

console.log('Service Worker Registered')

})

}

</script>

After a simple check for browser compatibility, we register the service worker and print a message to the console confirming its success.

With my service worker written and the file referenced in index.html, I’m ready to deploy this site and see the results.

For a simple, reliable SPA deployment with easy /index.html fallback policies for our static routes, we’re going to use Netlify. It also gives us HTTPS out of the box, which service workers require on anything other than localhost.

Note: If you’re following along, you’ll want to create an account on Netlify’s site and install their CLI tools before continuing.

The only thing stopping the site from being ready is a simple redirect rule that lets Netlify know how to handle our static routes. To accomplish this, we’ll write a quick config file that’ll live in our static directory that gets copied into our build:

./optimized/src/static/_redirects:

/* /index.html 200

To deploy the site, I build the project again and navigate to the /dist directory. Then I deploy from the CLI by running:

netlify deploy --production

It’ll ask for the path, which defaults to the current directory. It then prints the URL for the app in the terminal.

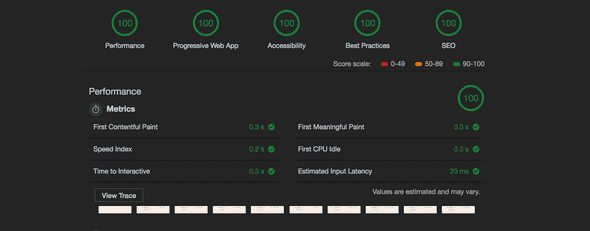

Once we navigate to the site and run the audit one last time, we see the results we’ve been ever-so patiently waiting for:

We did it 🎉!

A Note on Service Workers

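Keep in mind that service workers have their drawbacks, namely around cache busting: a cache-first worker like the one above keeps serving the old files until its cache is invalidated. For this reason, it’s common practice to add a hash value to your resource names so outdated files are easy to invalidate.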

In Webpack, this is as easy as adding the [hash] keyword to your bundle names in the output section.

Ex:

// ...

output: {

filename: '[name].[hash].bundle.js',

chunkFilename: '[name].[hash].bundle.js',

path: resolve(__dirname, 'dist'),

},

// ...

Then, to help automate the process of service worker configuration, I would recommend the SW Precache Webpack Plugin (thorough documentation provided in their linked GitHub page).
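To see why a hash in the file name helps with stale caches, here is a rough sketch using Node’s built-in crypto module. It hashes file contents directly, which is an assumption made for illustration; Webpack computes its own hashes internally, and `hashedName` is a hypothetical helper.

```javascript
const { createHash } = require('crypto')

// Build a file name that embeds a short hash of the file's contents.
// The 8-character md5 prefix is an arbitrary choice for this sketch.
function hashedName(name, contents) {
  const hash = createHash('md5').update(contents).digest('hex').slice(0, 8)
  return `${name}.${hash}.bundle.js`
}

const before = hashedName('app', 'console.log("v1")')
const after = hashedName('app', 'console.log("v2")')

// Different contents produce a different file name, so a cache keyed by URL
// treats the new build as a brand new resource.
console.log(before !== after) // true
```

Because the URL changes with the contents, neither the browser cache nor the service worker cache can mistake an old bundle for the new one.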

Wrapping Up

With a few modifications to our original project, we were able to decrease the page load time, optimize our caching strategy and handle offline requests.

Thanks to the minification, bundling, and code splitting capabilities of Webpack—paired with a service worker with sensible configs—we can ship a JavaScript-powered web app without the bloat plaguing so many projects in the space.

Although we touched on several options in this article, there are still plenty of ways to push the performance envelope even further. Below are a few articles that go into more detail, as well as offer additional performance tweaks:

As always, thanks for reading.

alephnode

a blog about javascript, node, and math musings.
